Xu Li, CEO of SenseTime: AI Is Breaking the Dimensional Wall between the Virtual World and the Real World

Last week, the SenseTime AI Forum at the 2021 World Artificial Intelligence Conference (WAIC) brought together leading figures in the industry, who shared many inspiring views on technology, science fiction, the humanities, and governance and gave participants a powerful storm of ideas. At the forum, Xu Li, Co-founder and CEO of SenseTime, delivered a keynote speech entitled “AI Is Breaking the Dimensional Wall between the Virtual World and the Real World”, in which he analyzed the core value of SenseCore in detail and gave an intuitive interpretation of the “password” connecting the virtual world and the real world.

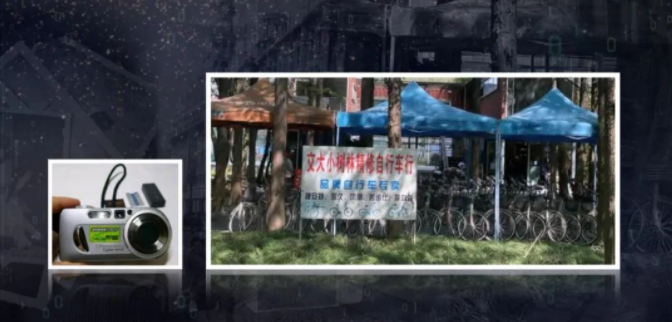

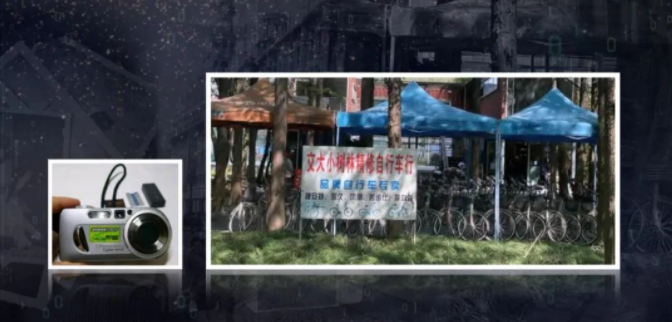

Xu Li believes that SenseCore will be able to truly reduce the cost of AI production factors and promote the comprehensive digital transformation of the physical world. SenseTime is committed to seamlessly integrating the virtual world and the real world: through SenseCore and its AI technology platforms, it digitizes the real world and moves it into the virtual world, while projecting the virtual world back into the real world, to break the dimensional wall with AI.

The following is the content of Xu Li's speech, as shared by SenseTime:

▎Three steps to digital transformation

Today, I want to talk about a lighter topic: how can AI help us shuttle between the virtual world and the real world? Our real world is undergoing a process of digitalization, in which things in the real world are digitized and moved into the virtual digital world, while content produced in the digital world is projected into the real world through different carriers.

First, what is digitization? I think of my first digital camera, a 2-megapixel Olympus that I bought more than 20 years ago. I was very happy with it and took photos everywhere on campus. I thought that was digital; after all, it was called a digital camera. I remember taking a photo of a very distinctive bicycle repair shop on campus called “SJTU Grove Elf Bicycle Repair Shop”. When I visited the university recently, I found the repair shop still there, with the same name and signboard it has used for more than 20 years.

Initially, most of the bicycles repaired there were made by Phoenix and Forever, both of which are memories of that era. At that time, we felt that recording with digital cameras, voice recorders, DV camcorders, and other digital devices was digitization. But when I look at this photo again today, it seems that I can't do anything with it except recall the past. I had merely “pixelated” the scene.

Digital transformation is a tool. When we speak of it, what are we really talking about? As I understand it, the digitalization we are talking about aims to build a digital world in which access, search, and operations can directly affect the real physical world. Building such a digital world usually takes several steps:

Step 1: Digitization of scenarios, i.e. the pixelation and 3D reconstruction we are familiar with. Many of our past big data applications completed this first step. However, if the data is not combined with real business processes, the effect of pure digitization is limited.

Step 2: Structuralization of elements, i.e. extracting the meta-elements that are meaningful to human beings, based on the perception and understanding of the large amount of data obtained through digitization.

Step 3: Interactivity of processes. We often hear about process re-engineering. In digitalization, the process must first become interactive, whether through interaction with humans or, one step further, through machine decision-making.

Building a digital world with business value allows us to use such data directly to search and influence real processes. On the basis of interactive processes, business processes can be reshaped and automated, which is also a core capability of the SenseFoundry/SenseFoundry Enterprise platform.

Let's take the SenseTime Technology Building in Shanghai as an example.

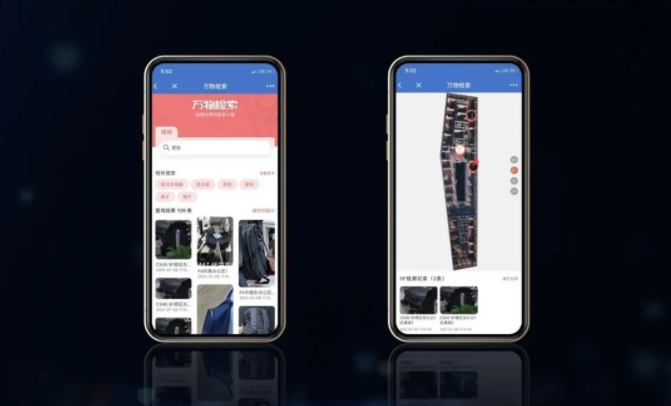

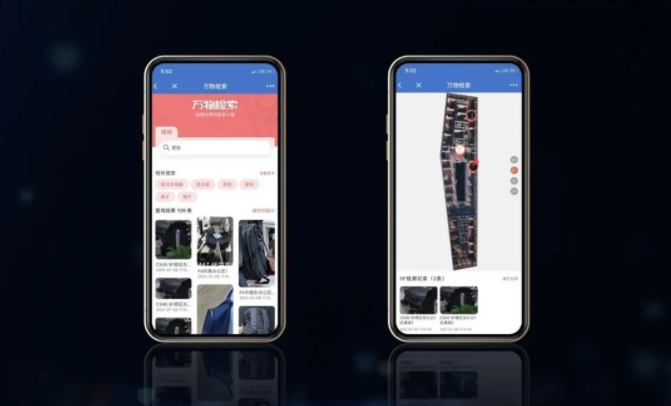

In the first step, we reconstruct the entire building in 3D, creating a digital twin of the physical space, and superimpose the sensor data on that digital twin. But this step is only digitization. Building on it, we structuralize people, objects, events, and scenes through perception and recognition, adding semantic elements to the digital world to make it operable. Next, we put more business processes into this operating system to make the management of the entire building interactive and smarter. A series of office processes, such as off-peak queuing for dining, conference room reservation, automatic food delivery, electronic lockers, and lost and found, can be re-engineered once the building has been digitized and structuralized. For example, the SenseTime smart office system, which is based on SenseFoundry Enterprise and other platforms, has a function called “Search Everything” that uses an offline search engine to search for anything, so car keys and laptops lost in public areas can be found with it.

After opening it, we can see the words our colleagues search for most frequently. Two of them are highlighted: “lantern” and “pot”. I'm curious why some colleagues are looking for a pot in the office. If you search for a backpack, you can see the backpacks in the public areas marked on the 3D map.
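To make the “Search Everything” idea concrete, here is a minimal sketch of how structuralized objects on a 3D map might be searched by label. It is not SenseTime's implementation: the `StructuredObject` record, the `search_everything` function, and the sample coordinates are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StructuredObject:
    """One recognized item, i.e. an output of the structuralization step (hypothetical)."""
    label: str        # semantic label assigned by some recognizer
    floor: int        # floor of the building in the digital twin
    position: tuple   # (x, y, z) coordinates on the 3D map, in metres


def search_everything(index, query):
    """Return every structured object whose label matches the query (case-insensitive)."""
    wanted = query.strip().lower()
    return [obj for obj in index if obj.label.lower() == wanted]


# Toy index standing in for the structuralized digital twin; all values are made up.
index = [
    StructuredObject("backpack", 3, (12.5, 4.0, 0.8)),
    StructuredObject("laptop", 3, (2.0, 7.5, 0.7)),
    StructuredObject("backpack", 5, (30.1, 1.2, 0.4)),
    StructuredObject("car keys", 1, (5.5, 5.5, 0.9)),
]

hits = search_everything(index, "Backpack")
for obj in hits:
    print(f"{obj.label} on floor {obj.floor} at {obj.position}")
```

The point of the sketch is only that search becomes possible once pixels have been turned into labeled, located elements; a real system would presumably use fuzzy matching and live sensor updates rather than an in-memory list.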

When we can empower more scenarios with intelligence, all daily processes will no longer require human intervention.

▎Why do you need SenseCore?

Among the steps of digital transformation, the digitalization of scenarios and the interactivity of processes are the more explicit ones: one connects to the input end, and the other connects to the business end. The structuralization of semantic elements, however, is the key to connecting both ends, and it is also the link that truly requires large-scale AI empowerment. Because a large number of long-tail elements in various scenarios need to be structuralized, we must achieve breakthroughs in core technologies.

At present, 80% of structuralized applications are low-frequency, long-tail scenarios. Without general-purpose AI, we would have to invest a large amount of manpower in every single project, and even then, problems that essentially involve small data and small samples could not be solved well. In addition, if the technology remains focused on solving one problem at a time, it will struggle to generalize across scenarios, resulting in unstable performance. A general-purpose ultra-large-scale model, combined with scenario-specific refinement and optimization on small samples, becomes the core of solving the problems of production cost and precision, and this leads to an essential demand for AI computing power.

Ten years ago, we saw only the benefits brought by the large-scale application of deep learning algorithms; five years ago, we could see the benefits brought by industry big data breaking through the industrial red line on individual problems. Next, we may see the benefits brought by AI supercomputing, which will help everyone explore a larger solution space.

Common sense suggests that the more precise the algorithm, the less computing power it should require. Yet the computing power demanded by the best AI algorithms has increased almost a million-fold over the past 10 years, which shows that we are exploring unknown solution spaces: more computing power is needed only when the search space becomes larger.

In 2017, SenseTime began exploring big data-based deep neural networks and the optimization of parallel computing power, and launched its research on SenseCore. SenseCore is analogous to a particle collider in high-energy physics, which helps discover new laws and new types of quantum particles through the high-speed collision of two beams of particles. The results of particle collisions are unpredictable, but only through continuous exploration and experimentation is it possible to find laws that truly explain the physical world. Similarly, when we explore the potential of AI, designing general-purpose models is in fact a resource adventure. It is necessary to try many different, very large models and iterate continuously to achieve excellent generalization, which is why we call it SenseCore.
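As a rough illustration of what the growth in computing demand quoted in the speech implies, the short calculation below converts “almost 1 million times in 10 years” into an equivalent doubling time. It assumes steady exponential growth over the whole period, which is a simplification made here and not a claim from the speech.

```python
import math

growth_factor = 1_000_000   # "almost 1 million times", as quoted in the speech
period_months = 10 * 12     # "in the past 10 years"

# Number of doublings needed to reach the growth factor,
# assuming smooth exponential growth (an assumption of this sketch).
doublings = math.log2(growth_factor)
doubling_time_months = period_months / doublings

print(f"doublings: {doublings:.1f}")
print(f"implied doubling time: {doubling_time_months:.1f} months")
```

Under that assumption, a million-fold increase corresponds to roughly 20 doublings, or one doubling about every six months.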

SenseCore is divided into three layers and nine modules.

The first layer is the computing power layer, which includes chips for training computation, AI data centers (AIDC), and underlying sensors. The “Artificial Intelligence Computing Power Industry Ecological Alliance (ICPA)”, jointly formed by SenseTime, Tsinghua University, Fudan University, Shanghai Jiao Tong University, the China Academy of Information and Communications Technology, and industry partners, will solve problems from scratch, improve software and hardware integration, and optimize algorithms. SenseTime's Artificial Intelligence Data Center (AIDC) is currently planned to have a total computing power of 3,740 petaFLOPS (1 petaFLOPS equals 1 quadrillion floating-point operations per second).

The second layer is the platform layer. Above the computing power layer, we need a software platform comprising a data platform, a training framework, an acceleration module, and a model production platform. The data platform is easy to understand: it is mainly used for data storage, annotation, and encryption. The training framework is SenseParrots, a deep learning training framework developed in-house by SenseTime. The acceleration module is SensePPL, SenseTime's high-performance computing engine, which enables us to make better use of distributed data and hardware acceleration. And with a general-purpose foundation model, we can derive various small-sample models, which can be produced efficiently through the model production platform.

The third layer is the algorithm layer, which provides ready-to-use algorithm modules. Many scenarios share reusable modules; when a problem does not need to be solved again, you can take the required algorithm directly from the toolbox. SenseTime's algorithm toolbox includes 17,000 algorithm models. In addition to the toolbox, SenseTime has integrated some SOTA algorithms into the OpenMMLab system, which has gained 37,000 stars on GitHub. The algorithms on this platform have also accumulated best practices in use, allowing anyone to get started from scratch.
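For a sense of scale, the planned AIDC figure of 3,740 petaFLOPS quoted above can be converted into raw operations per second and into exaFLOPS. The snippet below is only a unit conversion using standard SI prefixes; the variable names are chosen here for illustration.

```python
PETA = 10**15   # SI prefix peta
EXA = 10**18    # SI prefix exa

planned_petaflops = 3740  # planned total computing power quoted in the speech

ops_per_second = planned_petaflops * PETA
exaflops = ops_per_second / EXA

print(f"{ops_per_second:.3e} floating-point operations per second")
print(f"= {exaflops} exaFLOPS")
```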

The integration of the computing power layer, the platform layer, and the algorithm layer gives birth to SenseCore, which can truly reduce the cost of AI production factors and promote the comprehensive digital transformation of the physical world. What can we do with SenseCore? Here are some examples:

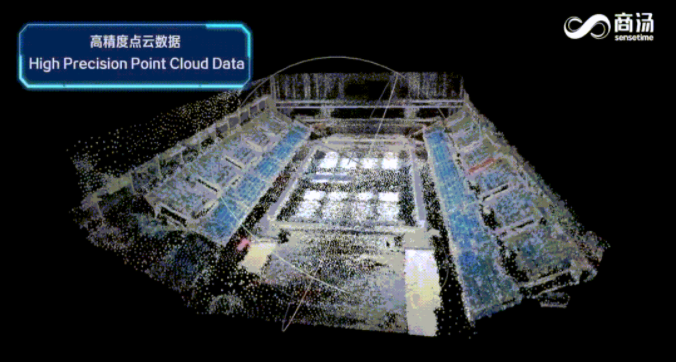

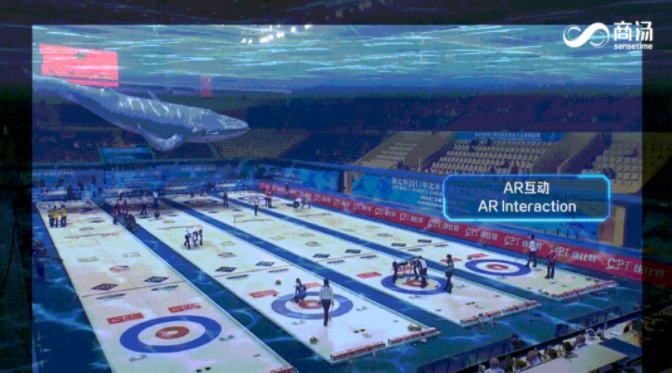

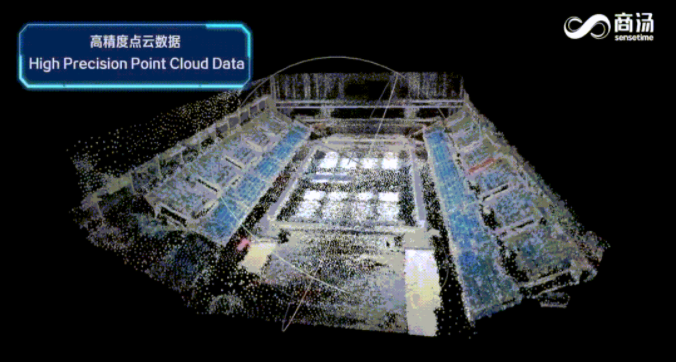

This is the Water Cube, a venue for the Winter Olympics. The first step is to reconstruct its 3D structure through the digitalization of scenarios. The second step is to structuralize all the people, events, and scenes in the venue, so that you can really understand what is happening there.

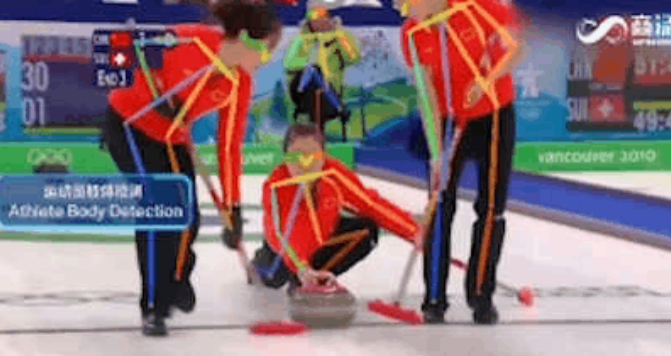

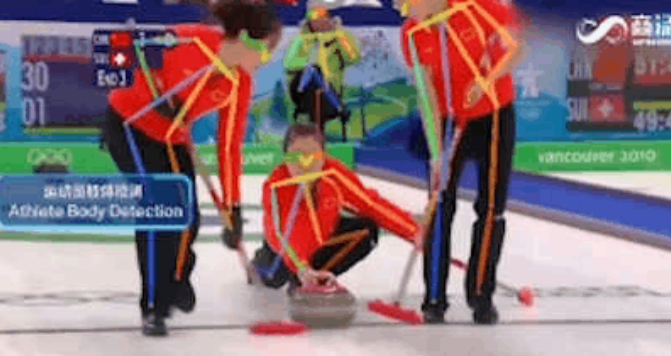

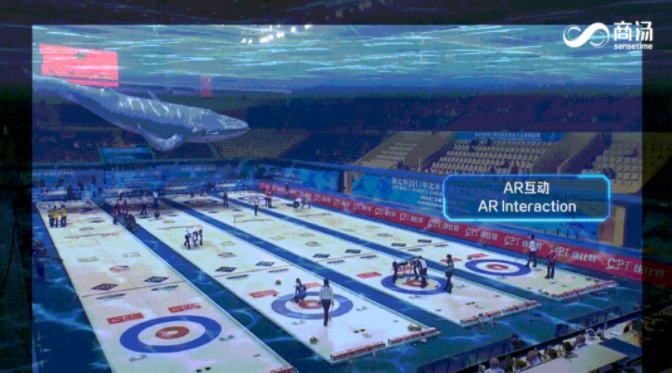

Here it is more about motion posture, trajectory, and so on. Taking curling as an example, we can let the machine understand the trajectory of the curling stone, the joint movements of the athletes as they push the stone, and other related content. The third step is to make the process interactive. Based on this structuralized information, both the coach's analysis of the athletes and the audience's interaction can be iterated on. We can see the entire 3D content overlaid while watching the game, and the algorithm can even make better trajectory predictions and enable surreal interactions.

Let's look at another common scenario in our daily life: the escalator. Escalator safety has always been a very important issue, because the escalator is a completely open environment. After we digitally reconstruct the physical information of the entire escalator, we can define a variety of abnormal scenarios on it, including falling, going backwards, pushing a wheelchair, and pulling a suitcase. These are all structuralized elements that can be connected with the data model at the semantic level.
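The abnormal scenarios listed above can be pictured as a small set of structuralized event types that software can act on. The sketch below is a hypothetical illustration: the event names follow the speech, but the `Response` record and the `POLICY` table are assumptions made here and do not describe SenseTime's actual rules.

```python
from dataclasses import dataclass
from enum import Enum


class EscalatorEvent(Enum):
    """Abnormal scenarios named in the speech, as structuralized elements."""
    FALL = "falling"
    WRONG_WAY = "going backwards"
    WHEELCHAIR = "pushing a wheelchair"
    SUITCASE = "pulling a suitcase"


@dataclass(frozen=True)
class Response:
    """What the system does when an event is detected (hypothetical fields)."""
    slow_down: bool      # automatic action on the escalator
    notify_admin: bool   # escalate to a human administrator


# Hypothetical policy table; a real deployment would define its own rules.
POLICY = {
    EscalatorEvent.FALL: Response(slow_down=True, notify_admin=True),
    EscalatorEvent.WRONG_WAY: Response(slow_down=False, notify_admin=True),
    EscalatorEvent.WHEELCHAIR: Response(slow_down=True, notify_admin=True),
    EscalatorEvent.SUITCASE: Response(slow_down=True, notify_admin=False),
}


def handle(event):
    """Look up the configured response for a detected event."""
    return POLICY[event]


for event in EscalatorEvent:
    print(event.value, "->", handle(event))
```

Keeping the event vocabulary separate from the response policy is one possible design: the recognition side only has to emit a known event type, and the business side can change how each type is handled without touching the perception models.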

With this connection, we can re-engineer and automate the whole process, from detecting an abnormality and escalating the report to solving the problem, for example by automatically reducing the speed of the escalator. In the whole process, only the problem-solving step still needs manual intervention; for example, if someone goes backwards, the administrator needs to intervene.

▎Let the virtual world shine into reality

Having talked about the real physical world, let's go back to this photo.

I have been wondering how this bicycle repair shop has lasted on campus for more than 20 years. I tried using AI to analyze it: I typed the shop name into the Smart Translator, and it really told me the truth. The translator rendered it as “The woods are repairing bicycles”, taking it that the woods themselves are repairing bicycles.


Then I read the signboard again and found that I had been segmenting the sentence incorrectly for all these years. Why is the brand evergreen? Because it's called “SJTU Grove Elf Bicycle Repair Shop”: it is the Grove Elf who repairs the bicycles. Now that I know the truth, I need to tell my classmates. I want to project the Grove Elf into the real world. To present this “Grove Elf”, I need to superimpose the digital virtual world on the real scene.

This is the other channel: superimposing the virtual world on the real world. SenseTime has created an enterprise-level platform for this purpose, which can connect a large number of basic hardware devices, including mobile terminals, IoT devices, and AR/MR glasses. In addition, we provide integrated solutions for many real-world scenarios, including smart venues, scenic spots, museums, playgrounds, large supermarkets, and airport transportation hubs. We call this infrastructure platform SenseMARS, and we can use it to discover different starry skies.

Now, let's invite an expert from the virtual world for a live interview. Please welcome Gongsun Li from Honor of Kings, a pro who lives in the Metaverse.

To our surprise, Li turns out to be a boy. Actually, there are Li 1, Li 2, and so on. This interview shows just one capability of the SenseMARS platform, through which we can drive virtual characters to complete various interactions and transformations. With such a platform, thousands of different people can share the same face and engage with the outside world through the same customer service interface. SenseTime is committed to building infrastructure for mixed reality. SenseMARS adapts to various terminals and even supports connection with mini programs and browsers.

What further room for imagination is there at the terminal level? Coming back to the photo of the bicycle shop, I find that the shop sign can actually be used for another two decades. The bicycle (“zixingche” in Chinese) in the photo might no longer exist in 20 years. But just ponder the term “zixingche”: doesn't it mean a vehicle that travels by itself, and isn't that equivalent to autonomous driving? So Grove Elf, the bicycle shop, can go on repairing the “zixingche” of the new era 20 years from now.

This year, we combined the SenseMARS platform with an autonomous vehicle, turning the vehicle into a fusion of the real and the virtual. This SenseAuto AR-Robobus from SenseTime also became the showpiece exhibit of this year's WAIC.


In the autonomous AR minibus, all the windows were fitted with AR screens, which enabled real-time projection of the scene outside the vehicle and gave passengers the feeling of being in a world of different dimensions.

The SenseAuto AR-Robobus gave demonstration rides near the WAIC venue. Its on-board dual redundancy solution of “radar + visual perception” made the minibus's fully autonomous driving even safer. The AR minibus can bring us many different experiences, from real-time stylization of the real scene outside the vehicle to the superimposition of visual content such as urban industrial development, economic planning, and scientific, technological, and cultural scenes, so that the vehicle as a whole becomes a real exhibition hall.

Today, SenseTime strives to fully connect the virtual world with the real world, projecting the real world into the virtual digital world through SenseCore and SenseFoundry/SenseFoundry Enterprise. Meanwhile, SenseTime projects the virtual world into the real world through SenseMARS, truly connecting the virtual with the real and breaking the dimensional wall with AI, so that the real world drives the iteration of the virtual world, and the virtual world enhances the real world.

Thank you! Finally, here is a graceful dance presented by Gongsun Li. Please enjoy it.
